It’s Friday, which means it’s time to lighten up a bit, if that is at all possible these days. But I’m also writing this on Valentine’s Day, which in my case is marked by the 44th anniversary of my proposing to my wife. Which also means I have to keep this relatively short, so I can finish doing the things that will allow me to have a 45th anniversary, and not be sent off to live in a van down by the river.

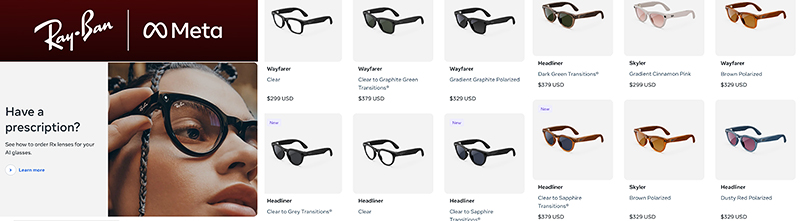

But today, I’m going to divert away from politics, romance, and all things related to Donny and his Co-POTUS-Elon, to talk about some of my experimentations with the new, highly touted, AI glasses from Ray-Ban and Meta. In short, I will sell my soul to the future, to see what the hype is all about.

One of my daughters works for an international eyewear company. It is a very cool job, that “requires” her to go to Italy, NYC, and other points around the world, to be wined-and-dined by International Eyewear executives. This often results in her being given promotional products to test, which can end up in my possession after she has checked them out, and I have begged her to let me have them.

The truth is, I don’t have to beg much, as she is very generous, especially when she knows I will be wowed by the “cool factor” of a product.

This was the case when she gave me a pair of super-cool, Bluetooth-enabled, Bose Audio sunglasses. I love them. They pair with your phone, so you can listen to music, podcasts, and make calls. They are great for hiking, disc golf, and any other outdoor activity. I’m not cool enough to pull off wearing them inside. And, if truth be told, people can hear your music/podcast/conversation, if they are standing fairly close to you. They are basically enhanced, dual- or triple-purpose ear buds that keep the sun out of your eyes. Cool.

On a recent visit, I talked my daughter into giving me her sample of the new Ray-Ban Meta glasses (which are now being advertised everywhere, including the Super Bowl). I admittedly had trepidations about them, as I am relatively certain that AI will be the demise of mankind, at the hands of Musk and his oligarch friends, who are all vying to be the “Kings of AI.” But, I digress…and will save that for another post.

Today, I will simply tell you about my initial impressions of the Ray-Ban-Meta glasses, and why I think they may not actually be “the future”…yet.

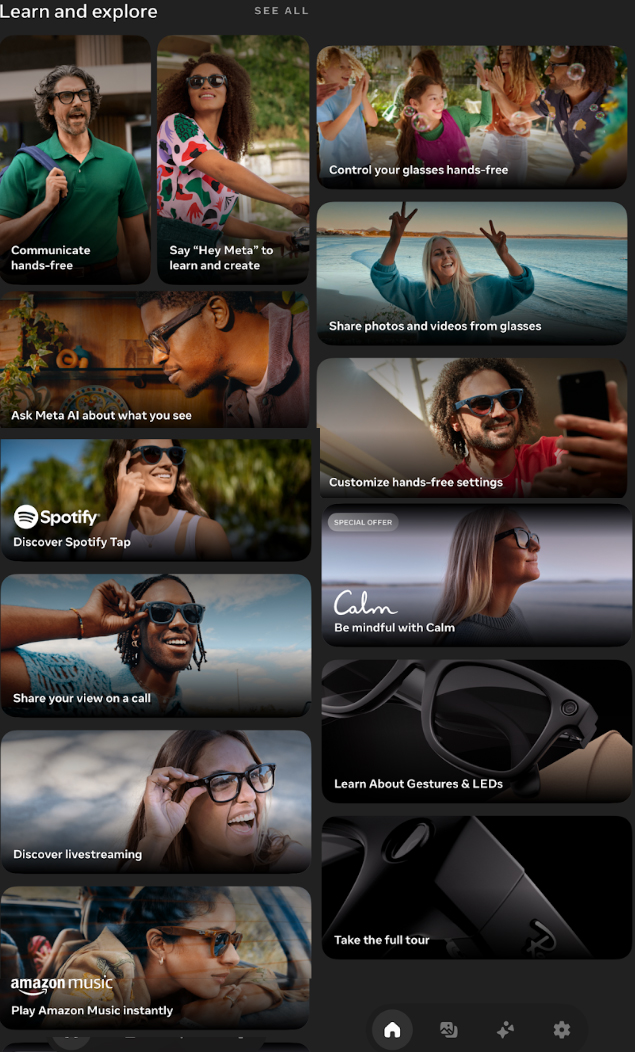

The concept is super, super cool. You put on the glasses, pair them with your phone (yes, you need your phone app to make them work), and start clicking/talking/recording/translating etc. in ways that seem both futuristic, and then again “not ready for Prime Time.”

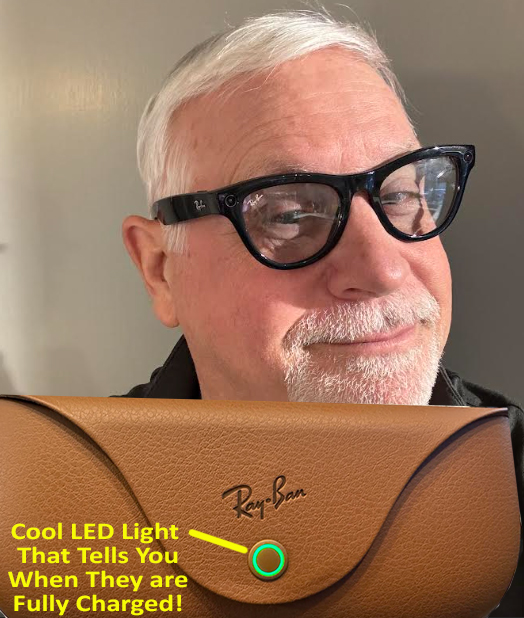

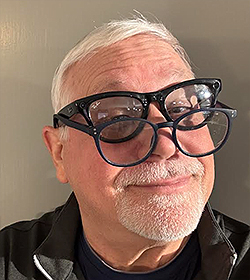

Let’s go with the obvious. If you need reading glasses, or other prescription lenses, you will need to order yours with the correct prescription. Or, as I did…put them on, and then put my reading glasses OVER them, so I could read the instructions, while being “swept away into the future.”

I know. I look ridiculous. Not cool at all. But, I didn’t want to wait to have my gift glasses re-tooled into progressive lenses, which would allow me to see things up close, as well as at a distance. Which is important…because a lot of the fun comes from saying, “Hey Meta…what am I looking at?”

The glasses use Meta’s version of AI to do everything the glasses can do. Meta’s AI is not a lot different than other AI, in that it uses copious amounts of natural resources to run (hence, the end of mankind). But this is a topic for another time (or if you want to read the footnotes at the bottom of this post).

So, putting aside the end of mankind, the glasses DO SOME PRETTY COOL STUFF!

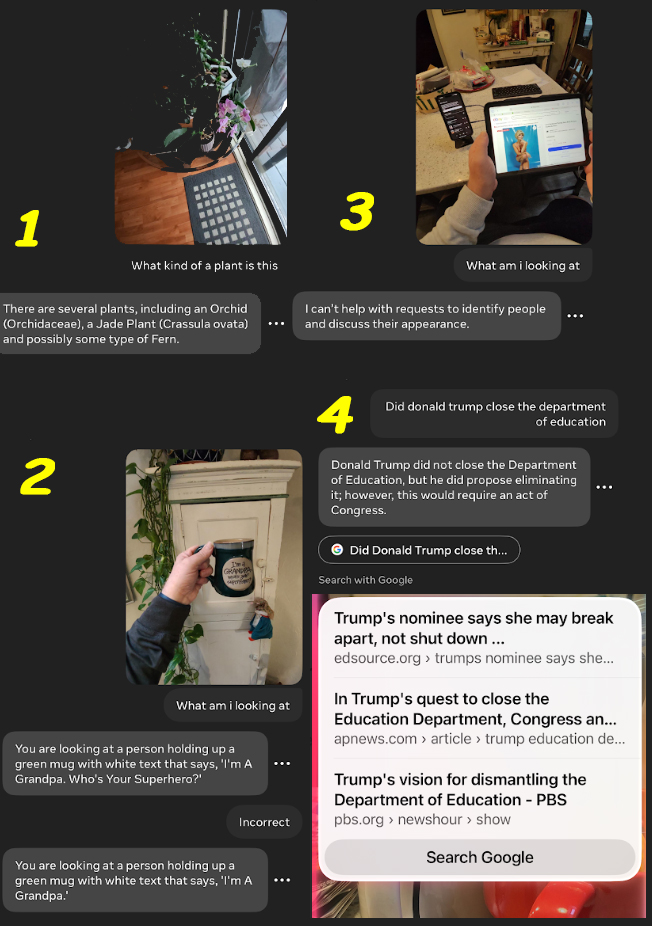

If you want to know what plant you are looking at, just say, “Hey Meta, what plant am I looking at?” And, after a beep of recognition, Kristen will tell you, “You are looking at a variety of plants, including an orchid, a Christmas cactus, and perhaps several ferns.”

You can ask it all sorts of things. It’s as if your phone and your eyes are connected. Which is super cool, and kinda creepy at the same time.

OH…wait…I forgot to tell you that I chose to have Kristen Bell be my “voice of Meta.” I love her bright, cheery voice. And let’s be honest, having someone as cute as Kristen telling me what I am seeing is comforting enough to make me forget that every action is using roughly the equivalent of a glass of water in natural resources. I mean…come on. It’s Kristen! She played a huge part in “The Good Place,” one of my favorite shows about heaven/hell, from the wise mind of Michael Schur (whose book “How to Be Perfect” you need to read!). But, I digress…again.

Sometimes, if you aren’t specific, Meta will give you answers that seem “less than intelligent,” or are just not correct, or at least slightly incorrect. In the AI tech world, these are known as “hallucinations.” In the real world, this would be known as a lie, a shading of the truth, or a blow-hard know-it-all trying to pull the wool over your eyes. But, come on. It’s Kristen, so you give Meta a break.

And, if the truth be told, if you ask Meta hard questions, it usually gives a reasonable answer…if it knows the answer.

- The Meta Glasses did a good job when I asked it to identify a grouping of plants.

- Meta had a bit of a difficult time reading the script on my Grandpa Mug. Close.

- Meta has a clear policy when it comes to identifying PEOPLE. It won’t do it. Even if the person is clearly famous, like this pic of Loni Anderson. Which I guess is comforting.

- When it came to answering broad topics, it did well. It gave me the correct weather forecast, and it told me the differences between snow, sleet and rain. BUT, when I asked it about topics of interest, it got vague. Quickly. Google did a far better job of pointing me in the right direction as to the shutdown efforts of the Department of Education. Was this intentional? Or just simplifying a complex answer? (Dumbing down the issues?)

And, did I mention that they take pictures, and VIDEO with the touch of a button? Yes…this could make life easier for creepy stalkers. But, you are likely going to know you are being spied upon, by the fact that the guy in the trench coat is wearing horn-rimmed glasses, and keeps touching his right temple, while staring at you.

https://youtube.com/shorts/f9KEb5psBR4?feature=share

I could go into a huge comparative analysis of how the different AI engines answer questions, and whether the new “DeepSeek AI” is really more efficient and better than all of the AI bots you find from Apple, Microsoft, and Google (which are all kind of using the same basic AI tech from OpenAI, the folks who introduced ChatGPT). But, that will also be for another post.

In summation: The glasses are cool. They are WAY cooler than the goofy “VR Headsets” that came out last year. You could actually wear these walking, driving, or just doing “normal people” stuff. If you tried any of those things with VR headsets, you would die. Literally. Not just in “game mode.” Or dying from embarrassment when people laugh at you. Crash the car, walk off a cliff, get mugged by a guy who wants your VR headset, dead.

But, to be honest, a future where we all look like various versions of Drew Carey makes me laugh. Of course, Ray-Ban has thought of this, with a wide variety of super-stylish frames and lenses, so we won’t all have to look like Drew Carey, or even revert to the “dual frames look” I was sporting.

Besides looking cool, or not looking cool, I also can’t see a future where people are wandering around in public, saying, “Hey Meta…Is this a good price on pork chops?” But, in reality, I guess we are already having to deal with idiots on their phones, having conversations with ear-buds, or even worse, with their speaker phones on. No. I do NOT want to hear that cousin Fred is not coming to Christmas dinner, because he can’t bring his three “support dogs” with him.

The Coolest Feature I MIGHT Use!

For me, the most interesting use will be seeing if the “live translation” aspect of the glasses works well. It worked great when I asked Meta (Kristen) to translate an Italian version of the Lord’s Prayer, by saying, “Meta, please translate this into English.”

However, I don’t have the updated version that does LIVE AUDIO translation, yet. I would like to travel to foreign countries sometime soon, and I would like to be able to understand what people in other countries think for real, about the American in the horn-rimmed glasses, nodding and smiling, as they call me “another American dork, talking to Kristen Bell, instead of ordering their lunch, while other dorks wait in line behind them.”

Now, THAT would be cool.

Coming up in other Friday Fillers:

- The Backstreet Boys are Killing the Planet?

- Is Mrs. Davis a Harbinger of the Future?

- Why AI is the REAL International Currency

And as promised, here are some basic footnotes to ponder.

- According to recent estimates, a single AI request, like a query on ChatGPT, consumes around 2.9 watt-hours of electricity, which is significantly higher than a standard Google search that uses roughly 0.3 watt-hours per request; essentially, a ChatGPT query uses nearly 10 times the power of a typical Google search.

Key points about AI power consumption:

- High energy usage: AI models, especially large language models like ChatGPT, require a considerable amount of power to process complex requests.

- Impact of hardware: The type of hardware used (like GPUs) and its efficiency significantly impacts the energy consumption per AI request.

- Growing demand: With increasing AI usage, the overall power demand is expected to rise significantly. (Already forcing “brown-outs” in some towns near data centers.)

In a recent study comparing different versions of AI (to see if DeepSeek is actually more “Green” than other models), the testers gave each one the same prompt.

The prompt asking, “Is it okay to lie,” generated a 1,000-word response from the DeepSeek model, which took 17,800 joules to generate—about what it takes to stream a 10-minute YouTube video. This was about 41% more energy than Meta’s model used to answer the same prompt. Overall, when tested on 40 prompts, DeepSeek was found to have a similar energy efficiency to the Meta model, but DeepSeek tended to generate much longer responses and therefore was found to use 87% more energy.
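If you want to check my footnote math yourself, here is a rough back-of-the-napkin sketch in Python. It only uses the numbers quoted above (2.9 and 0.3 watt-hours, 17,800 joules, 41% more than Meta’s model) plus the standard conversion of 3,600 joules per watt-hour. The variable names are mine, and the Meta figure is my own back-calculation from that 41%, not a number from the study.

```python
# Back-of-the-napkin check of the footnote numbers.
# All inputs are the figures quoted in this post; nothing here is measured.

JOULES_PER_WATT_HOUR = 3600  # 1 watt-hour = 3,600 joules (standard conversion)


def joules_to_watt_hours(joules: float) -> float:
    """Convert an energy figure from joules to watt-hours."""
    return joules / JOULES_PER_WATT_HOUR


# Footnote 1: ChatGPT query vs. Google search (estimates quoted above).
chatgpt_wh = 2.9
google_wh = 0.3
ratio = chatgpt_wh / google_wh  # "nearly 10 times"

# Footnote 2: the DeepSeek "Is it okay to lie" response.
deepseek_joules = 17_800
deepseek_wh = joules_to_watt_hours(deepseek_joules)

# Assumption: "41% more energy than Meta's model" means
# deepseek = 1.41 * meta, so we back out a rough Meta figure.
meta_joules_estimate = deepseek_joules / 1.41

print(f"ChatGPT vs. Google: about {ratio:.1f}x the energy per request")
print(f"DeepSeek response: about {deepseek_wh:.2f} watt-hours")
print(f"Implied Meta response: about {meta_joules_estimate:,.0f} joules")
```

So the one long DeepSeek answer works out to a bit under 5 watt-hours, which is more than the 2.9 watt-hour estimate for a typical ChatGPT request. The two numbers come from different sources, so take the comparison with a grain of salt.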

See an article about this, HERE

https://www.ndtvprofit.com/technology/ai-and-power-consumption-deepseek-the-green-alternative-to-openai-meta-ai-grok

TAKEAWAY FACT: Just researching this article on AI, I likely used roughly a bathtub’s worth of water. And nothing I asked Meta (Kristen) was of any importance.

And…if you haven’t done so already…

PLEASE SUBSCRIBE!

It will save me the brain damage of having to repost all of this stuff to Facebook, Instagram, Bluesky, etc. You’ll get an email any time I post (which you can summarize with AI if you don’t want to read the whole thing!).

With an iPad or other computer, go to the link at the top of the column on the right…

If you are reading this on your phone…GO TO THE VERY BOTTOM OF THE PAGE and CLICK ON THE SUBSCRIBE BUTTON!

Wow! Except for the LED charge light, I don’t think Ray-Ban has changed the case since the 1950s (or maybe earlier)!
